DeepSeek: The Chinese AI Model That Shocked the World

On January 20, DeepSeek’s R1 model was unveiled, making a significant impact with its remarkable cost-effectiveness [1]. The model not only met expectations but also surpassed other models on some metrics, at a reported training cost of around 5.6 million USD. The primary reason for DeepSeek’s cost efficiency was its low training cost [2], which was much lower than that of Google’s Gemini or OpenAI’s GPT-4 models. This achievement was seen as a threat to NVIDIA’s dominance of the GPU market and sets the stage for future competition, as future models may follow the same approach to get a better cost-to-performance ratio in training.

What is DeepSeek?

DeepSeek is a startup established and owned by the Chinese stock trading company High-Flyer that specializes in the development of AI technologies. Its creation, DeepSeek R1, is an innovative distilled large language model (LLM) that aims to deliver performance that exceeds expectations for its size. A distilled large language model is developed by using a larger model, such as GPT-4, to train a smaller counterpart [3]. This process is similar to an expert guiding a beginner; the beginner only needs to grasp the essential skills to perform their tasks, rather than everything the expert knows. By leveraging larger models, DeepSeek has managed to create a model that is competitive with them at a fraction of the cost.
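To make the expert-and-beginner analogy a little more concrete, here is a minimal sketch of the kind of loss that textbook knowledge distillation minimizes: the student is penalized when its softened output distribution drifts from the teacher's. This is a generic illustration, not DeepSeek's actual training code; the function names, the example logits, and the temperature value are all assumptions made up for this example.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives softer outputs."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores for three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The student that agrees with the teacher gets the smaller loss, which is the signal a training loop would use to pull the smaller model toward the larger one's behavior.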

DeepSeek’s Capabilities

DeepSeek R1 is not just another large language model (LLM); it’s a reasoning model. This distinctive feature sets it apart from most LLMs and helps it outperform them. A reasoning model is an AI system that analyzes information through clear, step-by-step reasoning, much like humans reflecting on their own thinking [4]. Unlike traditional AI models that give quick answers, reasoning models check their work by breaking down complex problems into smaller parts and explaining their thought processes. This ‘chain of thought’ method may take a bit longer, but it helps the model show and confirm how it arrived at its conclusions, making its decision-making more transparent and dependable. The visible reasoning also helps users learn how to approach a problem, which they can apply to future tasks.

Various tech YouTubers have put this model to the test by assessing its problem-solving capabilities. Mental Outlaw uploaded a video where they evaluated the performance of the DeepSeek R1 model against ChatGPT’s GPT-4o model. They asked the models questions such as how many r’s are in the word “strawberry.” While ChatGPT’s older model got confused and gave the wrong answer, both DeepSeek R1 and GPT-4o gave the correct answer. However, DeepSeek R1 went further and listed the positions of the r’s, demonstrating its superior reasoning capabilities.
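For reference, the answer the models were expected to reach is easy to check in code. The short sketch below counts the r’s and lists their positions; the helper name `letter_positions` is just an illustrative choice.

```python
def letter_positions(word: str, letter: str) -> list[int]:
    """Return the 1-based positions at which `letter` appears in `word`."""
    target = letter.lower()
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == target]

positions = letter_positions("strawberry", "r")
print(len(positions), positions)  # prints: 3 [3, 8, 9]
```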

Mental Outlaw also asked a trick question: “Johnny’s mother has three kids; the first is named Penny, the second is Nicole, and what is the third one’s name?” Both models got this question correct as well. Mental Outlaw also tested the programming capabilities of the models by asking them to create a snake game. DeepSeek R1 could not produce working code, while GPT-4o did, although its version had bugs, especially when the snake tries to eat the food. Mental Outlaw also compared the performance of DeepSeek R1 against ChatGPT o1 in solving complex reasoning and math problems. ChatGPT performed better in those two areas. This weakness is probably due to the smaller amount of data that DeepSeek R1 was trained on.

Another YouTuber, Theo, uploaded a video demonstrating the prowess of DeepSeek R1. He compared the performance of ChatGPT o1 with DeepSeek R1 in solving problems from Advent of Code, a series of programming puzzles designed for all skill levels that can be solved with any programming language [5]. The DeepSeek R1 model was unable to finish writing the code for the Advent of Code 2021 Day 12 problem, so Theo completed it with DeepSeek V3, which worked. He also tested a powerful non-reasoning model, Claude, but it was unable to solve the problem. Aside from that, Theo showed in his video that DeepSeek’s tokens are much cheaper than those of ChatGPT o1: DeepSeek R1 costs $0.55 per million input tokens and $2.19 per million output tokens. This makes DeepSeek more attractive for developers and businesses.
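To get a feel for what those per-token prices mean in practice, here is a rough sketch that estimates the bill for a given workload using the two rates quoted above. The token counts in the example are made-up numbers, and real bills may differ (for example, if cached inputs are priced differently).

```python
# Rates quoted in this article for DeepSeek R1, in USD per million tokens.
INPUT_USD_PER_MILLION = 0.55
OUTPUT_USD_PER_MILLION = 2.19

def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a workload from its token counts."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000

# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
cost = request_cost_usd(200_000, 50_000)
print(f"${cost:.2f}")  # roughly 22 cents
```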

Problems with Site Traffic and Blocked Words

One notable drawback of DeepSeek is the high volume of traffic the site receives due to the immense hype around its models. As of writing this article, I have run into problems using the DeepSeek R1 model, such as slow response times and occasional server errors. However, as the hype dies down and the site’s infrastructure is scaled up, it should be able to handle more traffic, improving the user experience.

In addition, since DeepSeek is a Chinese company, it censors potentially sensitive topics, such as the famous Tank Man who stood in front of a column of tanks in China [6]. This censorship policy can limit the user’s ability to explore certain topics. DeepSeek censors any inquiry about Tank Man as well as other sensitive areas of Chinese history, such as the Tiananmen Square massacre, several of which are showcased in David Zhang’s video criticizing DeepSeek.

DeepSeek stops generating content about Tank Man while ChatGPT can

However, ChatGPT has some stop words of its own, outlined in a Reddit post. Unlike DeepSeek, which censors specific events or political figures in China, ChatGPT’s blocked words are mostly the names of individuals. Most of these people don’t want ChatGPT to generate false information about them, which is reasonable.

A demonstration of ChatGPT stop words

NVIDIA’s Stock Plunges After DeepSeek Announcement

On January 27, global investors sold off NVIDIA shares in response to DeepSeek’s latest AI model release. Investors feared the model would threaten NVIDIA’s dominance, since the company designs the powerful yet expensive technology powering the latest AI developments. The selloff erased $593 billion from NVIDIA’s market value, the largest single-day loss for any company in Wall Street history [7]. According to Brian Jacobsen, chief economist at Annex Wealth Management, if DeepSeek proves to be a superior AI model, it could disrupt the AI investment narrative that has driven market growth over the past two years, as reported by Reuters.

In an interview with DDN published on February 21, NVIDIA CEO Jensen Huang challenged investors’ panic selling following DeepSeek’s release. He explained that DeepSeek’s reasoning feature, which performs multiple thought computations to generate higher-quality responses, demands more computing power, not less. Far from seeing DeepSeek as a threat, Huang expressed enthusiasm about R1’s open-source release, viewing it as an exciting development that could accelerate the adoption of AI models [8].

Misleading Headlines Contribute to Controversy

Misleading headlines focusing on the $5.6 million cost of training the model contributed a lot to the controversy surrounding DeepSeek. However, SemiAnalysis, a semiconductor research and consulting firm, stated that this figure is only a small portion of the total cost. SemiAnalysis estimates that DeepSeek’s hardware spending could be around $500 million over the company’s history [9].

The reported $5.6 million cost in the paper only reflects the GPU expenses associated with the pre-training phase, which is a fraction of the overall cost of the model and does not include critical elements like research and development or the total cost of ownership of the hardware. As a comparison, SemiAnalysis notes that training Claude 3.5 Sonnet cost tens of millions of dollars, yet that amount alone would not cover research and development, data collection and cleaning, employee compensation, and much more.
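A quick back-of-the-envelope calculation shows how small the headline number is next to the hardware estimate. The sketch below simply divides the two figures cited in this article; they measure different things (GPU time for one training run versus hardware spending), so the ratio is only a rough sense of scale, and the variable names are illustrative.

```python
# Figures as cited in this article, in USD.
REPORTED_TRAINING_COST_USD = 5.6e6     # headline training cost
ESTIMATED_HARDWARE_SPEND_USD = 500e6   # SemiAnalysis hardware estimate

share = REPORTED_TRAINING_COST_USD / ESTIMATED_HARDWARE_SPEND_USD
print(f"{share:.1%}")  # prints: 1.1%
```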

Conclusion

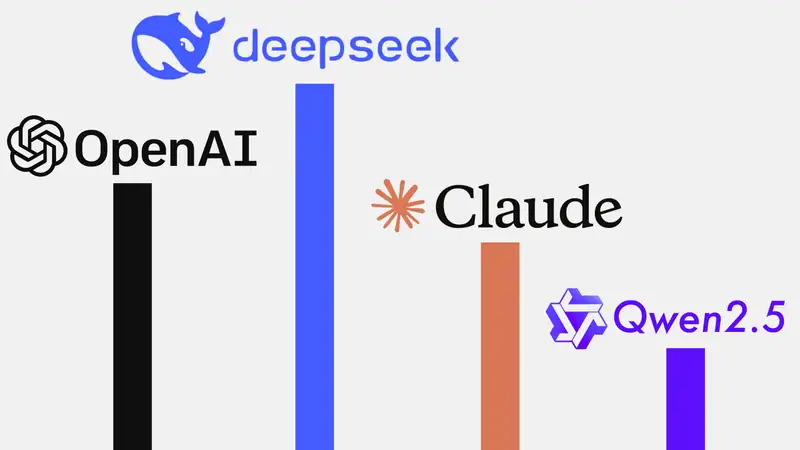

The latest large language model (LLM) developed by DeepSeek shook the AI landscape thanks to its impressive performance at a lower cost than competitors such as Google’s Gemini and OpenAI’s GPT-4. Developed by the Chinese startup DeepSeek, DeepSeek R1 is a reasoning model that analyzes questions using clear, step-by-step reasoning. Although the model performed well in tests against other models, it struggled with more complex tasks such as programming. Its affordability makes it attractive for developers, but challenges like slow response times and censorship of topics that are sensitive in China are notable drawbacks. And with the latest release of Qwen 2.5, another open-source LLM developed by Alibaba [10], China positions itself as a strong competitor in the AI market.

References

[1] https://api-docs.deepseek.com/news/news250120

[2] https://arxiv.org/pdf/2412.19437v1

[3] https://www.youtube.com/watch?v=r3TpcHebtxM&si=5UKTy7hJbzsrsq5i

[4] https://www.ibm.com/think/news/deepseek-r1-ai

[5] https://adventofcode.com/2024/about

[6] https://www.youtube.com/watch?v=RyGqeyNsuOY

[7] https://www.reuters.com/technology/chinas-deepseek-sets-off-ai-market-rout-2025-01-27

[8] https://www.youtube.com/watch?v=F3NJ5TwTaTI

[9] https://semianalysis.com/2025/01/31/deepseek-debates/

[10] https://qwen-ai.com

[11] https://epoch.ai/blog/how-much-does-it-cost-to-train-frontier-ai-models

[12] https://api-docs.deepseek.com/quick_start/pricing

[13] https://openai.com/db-src/pricing/

[14] https://www.youtube.com/watch?v=MjtF8FFt33c